Authors: Zhong-Qiu Wang, Samuele Cornell, Shukjae Choi, Younglo Lee, Byeong-Yeol Kim, and Shinji Watanabe

Abstract: We propose TF-GridNet for speech separation. The model is a novel multi-path deep neural network (DNN) integrating full- and sub-band modeling in the time-frequency (T-F) domain. It stacks several multi-path blocks, each consisting of an intra-frame full-band module, a sub-band temporal module, and a cross-frame self-attention module. It is trained to perform complex spectral mapping, where the real and imaginary (RI) components of input signals are stacked as features to predict target RI components. We first evaluate it on monaural anechoic speaker separation. Without data augmentation or dynamic mixing, it obtains a state-of-the-art 23.5 dB improvement in scale-invariant signal-to-distortion ratio (SI-SDR) on WSJ0-2mix, a standard dataset for two-speaker separation. To show its robustness to noise and reverberation, we evaluate it on monaural reverberant speaker separation using the SMS-WSJ dataset and on noisy-reverberant speaker separation using WHAMR!, and obtain state-of-the-art performance on both datasets. We then extend TF-GridNet to multi-microphone conditions through multi-microphone complex spectral mapping, and integrate it into a two-DNN system with a beamformer in between (named MISO-BF-MISO in earlier studies), where the beamformer proposed in this paper is a novel multi-frame multi-channel Wiener filter computed from the outputs of the first DNN. State-of-the-art performance is obtained on the multi-channel tasks of SMS-WSJ and WHAMR!. Besides speaker separation, we apply the proposed algorithms to speech dereverberation and noisy-reverberant speech enhancement. State-of-the-art performance is obtained on a dereverberation dataset and on the dataset of the recent L3DAS22 multi-channel speech enhancement challenge.
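Complex spectral mapping feeds the DNN the real and imaginary (RI) parts of the input STFT, stacked as feature channels, and trains it to predict the RI parts of each target. The sketch below illustrates only that feature layout. It assumes NumPy and an STFT tensor of shape (microphones, frames, frequency bins). The function names `stack_ri`/`unstack_ri` and the channel ordering (all real parts first, then all imaginary parts) are hypothetical illustration choices and are not necessarily what TF-GridNet uses internally.

```python
import numpy as np


def stack_ri(stft: np.ndarray) -> np.ndarray:
    """Turn a complex multi-channel STFT into real-valued DNN input features.

    stft: complex array of shape (P, T, F) for P microphones, T frames, F bins.
    Returns a real array of shape (2P, T, F). The ordering
    [Re ch0..ch(P-1), Im ch0..ch(P-1)] is a hypothetical choice.
    """
    return np.concatenate([stft.real, stft.imag], axis=0)


def unstack_ri(ri: np.ndarray) -> np.ndarray:
    """Inverse of stack_ri: (2C, T, F) real RI channels -> (C, T, F) complex."""
    c = ri.shape[0] // 2
    return ri[:c] + 1j * ri[c:]


rng = np.random.default_rng(0)
# Hypothetical 6-microphone mixture STFT with 100 frames and 257 bins (512-point FFT).
mix = rng.standard_normal((6, 100, 257)) + 1j * rng.standard_normal((6, 100, 257))
features = stack_ri(mix)
print(features.shape)  # (12, 100, 257)
```

With P microphones the network sees 2P real input channels. For each speaker it would output two real channels (RI at the reference microphone), which `unstack_ri` maps back to a complex spectrum for the inverse STFT.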

Contents

We provide one sound demo for each of the following tasks (and datasets):

1. Monaural Anechoic Speaker Separation (1ch WSJ0-2mix)

2. Reverberant Speaker Separation (6ch SMS-WSJ)

3. Noisy-Reverberant Speaker Separation (2ch WHAMR!)

4. Speech Dereverberation (8ch WSJCAM0-DEREVERB)

5. Noisy-Reverberant Speech Enhancement (8ch L3DAS22)

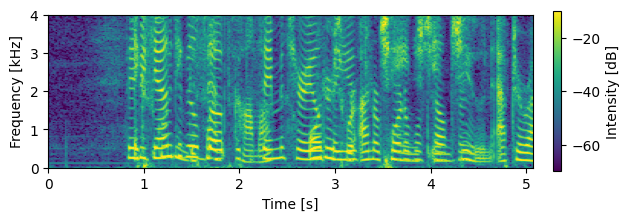

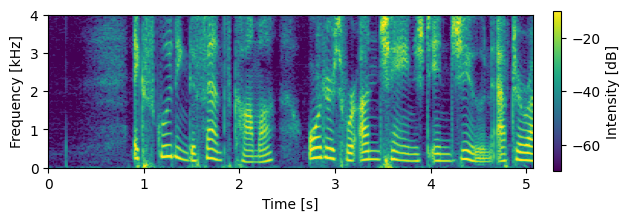

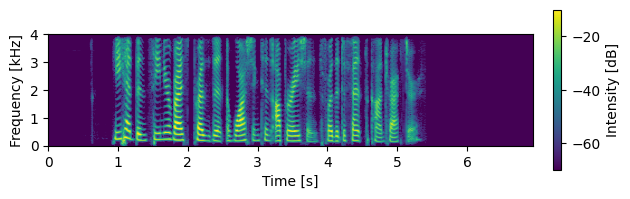

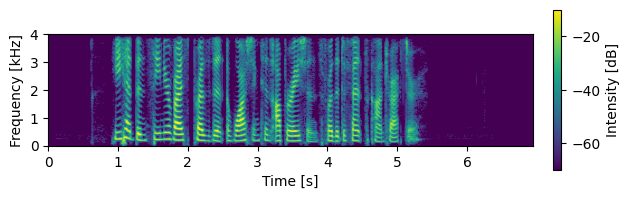

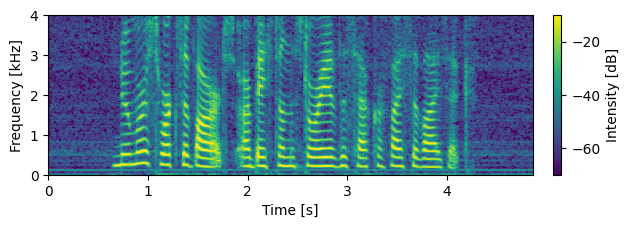

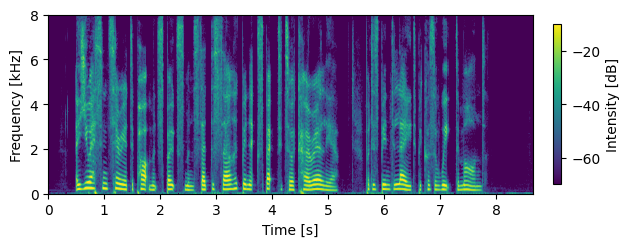

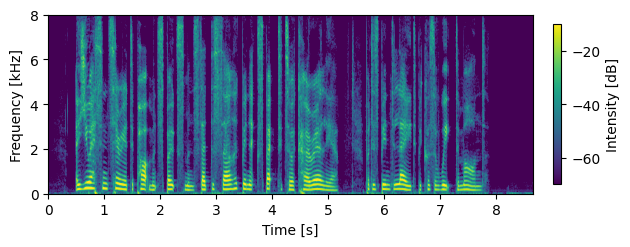

WSJ0-2mix (1ch)

Eval Example: 050a050l_0.96279_420o030g_-0.96279

Input Mixture: s0: SI-SDR = 0.2 dB, s1: SI-SDR = -0.6 dB

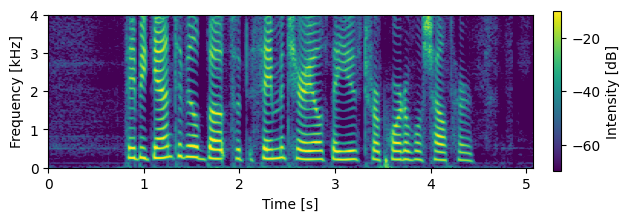

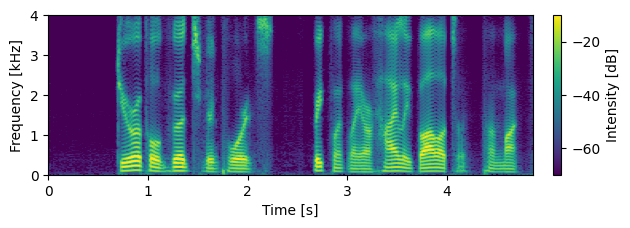

TF-GridNet (DNN1) Separated Source 0: SI-SDR = 19.6 dB

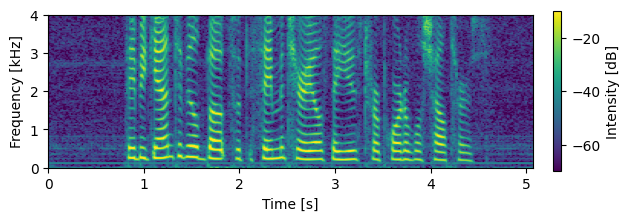

Oracle Source 0:

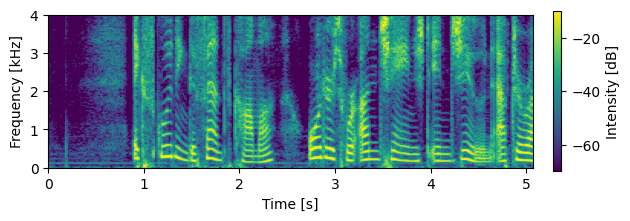

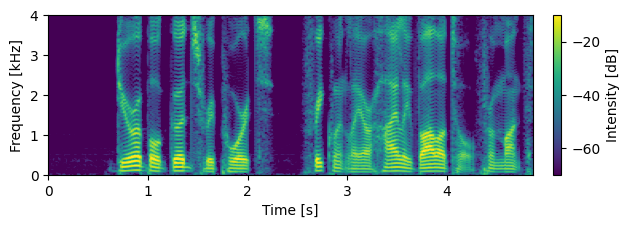

TF-GridNet (DNN1) Separated Source 1: SI-SDR = 19.2 dB

Oracle Source 1:

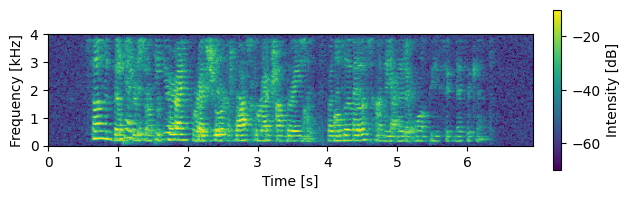

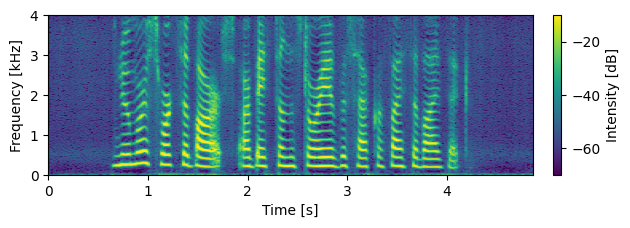
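Each example in these demos is scored with SI-SDR. The sketch below follows the standard definition. The estimate is projected onto the reference to obtain an optimally scaled target, and SI-SDR is the ratio of target energy to residual energy in dB. This makes the score invariant to the overall gain of the estimate. It assumes NumPy, zero-mean normalization, and a small `eps` for numerical safety. Official evaluation scripts may differ in such details. The second part shows SI-SDR improvement (output minus input) for the WSJ0-2mix example above. These are per-example numbers, not the 23.5 dB dataset average quoted in the abstract.

```python
import numpy as np


def si_sdr(estimate: np.ndarray, reference: np.ndarray, eps: float = 1e-8) -> float:
    """Scale-invariant SDR in dB between 1-D signals (zero-mean version)."""
    estimate = estimate - estimate.mean()
    reference = reference - reference.mean()
    # Optimal scaling of the reference: alpha = <s_hat, s> / ||s||^2.
    alpha = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = alpha * reference
    residual = estimate - target
    return 10 * np.log10((np.dot(target, target) + eps) / (np.dot(residual, residual) + eps))


# Per-example SI-SDR improvements for the WSJ0-2mix demo (separated minus input, in dB).
demo = {"s0": (0.2, 19.6), "s1": (-0.6, 19.2)}
for name, (mix_db, sep_db) in demo.items():
    print(name, round(sep_db - mix_db, 1))
# s0 19.4
# s1 19.8
```

Rescaling the estimate (for example, `si_sdr(3 * est, ref)`) leaves the score unchanged. This is why SI-SDR is preferred over plain SNR when a separation model's output gain is arbitrary.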

SMS-WSJ (6ch)

Eval Example: 102_445c0406_444c040r

Input Mixture: s0: SI-SDR = -7.5 dB, s1: SI-SDR = -6.7 dB

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 0: SI-SDR = 22.2 dB

Oracle Source 0:

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 1: SI-SDR = 21.5 dB

Oracle Source 1:

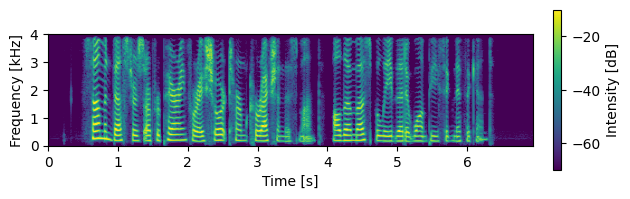

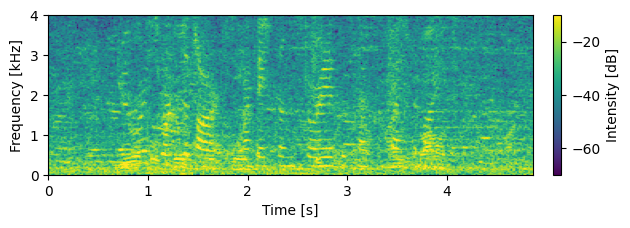

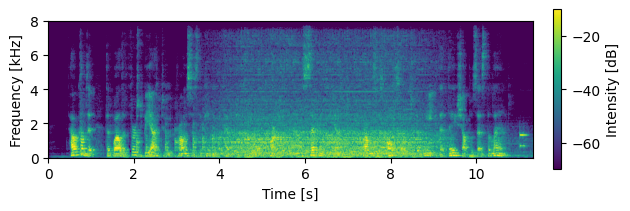

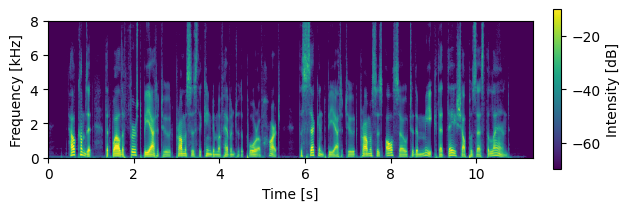
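The multi-channel results use DNN1+MCMFWF+DNN2, where the middle stage is the multi-channel multi-frame Wiener filter computed from DNN1's outputs. The sketch below is one common way to realize such a filter, under stated assumptions. For each frequency, it finds the linear filter over a window of past and future frames of all microphones that best matches (in the least-squares sense) DNN1's estimate at the reference microphone. It then applies that filter to the mixture. The context sizes `past`/`future`, the diagonal loading `delta`, and the function names are hypothetical. The paper's exact formulation may differ.

```python
import numpy as np


def stack_frames(Y: np.ndarray, past: int, future: int) -> np.ndarray:
    """Y: (P, T) complex STFT of P mics at one frequency.

    Returns (P * (past + future + 1), T); column t stacks
    Y[:, t - past], ..., Y[:, t + future], zero-padded at the edges.
    """
    P, T = Y.shape
    padded = np.pad(Y, ((0, 0), (past, future)))
    taps = [padded[:, k:k + T] for k in range(past + future + 1)]
    return np.concatenate(taps, axis=0)


def mcmfwf(Y: np.ndarray, S_hat: np.ndarray, past: int = 1, future: int = 1,
           delta: float = 1e-6) -> np.ndarray:
    """Hypothetical multi-channel multi-frame Wiener filter (per-frequency least squares).

    Y: (P, T, F) complex mixture STFT; S_hat: (T, F) complex DNN1 estimate at the
    reference mic. Returns the (T, F) beamformed output w(f)^H Y~(t, f).
    """
    P, T, F = Y.shape
    out = np.empty((T, F), dtype=complex)
    for f in range(F):
        Yt = stack_frames(Y[:, :, f], past, future)   # (D, T) stacked observations
        R = Yt @ Yt.conj().T                          # sum_t Y~ Y~^H, shape (D, D)
        r = Yt @ S_hat[:, f].conj()                   # sum_t Y~ S_hat^*, shape (D,)
        D = R.shape[0]
        load = delta * np.trace(R).real / D           # assumed diagonal loading for stability
        w = np.linalg.solve(R + load * np.eye(D), r)  # w = R^{-1} r
        out[:, f] = w.conj() @ Yt                     # apply filter: w^H Y~
    return out
```

Setting the derivative of sum_t |S_hat - w^H Y~|^2 with respect to w^* to zero gives (sum_t Y~ Y~^H) w = sum_t Y~ S_hat^*, which is what `np.linalg.solve` computes. Because the filter is linear in the mixture, its output tends to contain fewer nonlinear artifacts than the DNN1 estimate it was fit to. In a MISO-BF-MISO pipeline, the second DNN can then use it as an additional input.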

WHAMR! (2ch)

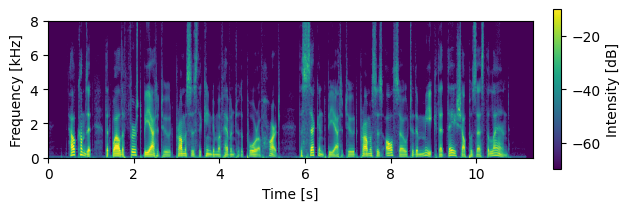

Eval Example: 050a0502_1.9707_440c020w_-1.9707

Input Mixture: s0: SI-SDR = -4.9 dB, s1: SI-SDR = -6.9 dB

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 0: SI-SDR = 13.4 dB

Oracle Source 0:

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 1: SI-SDR = 11.6 dB

Oracle Source 1:

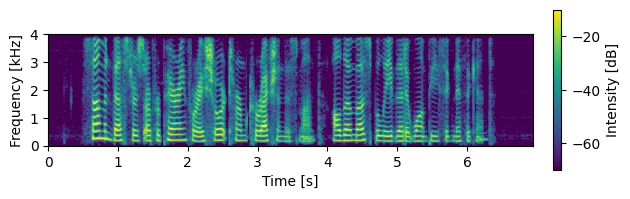

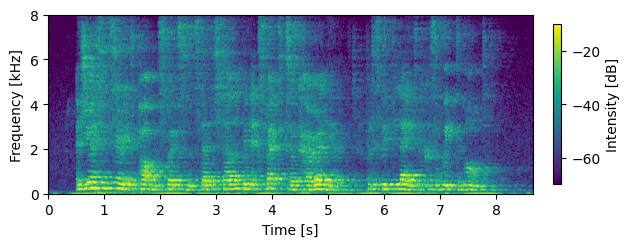

WSJCAM0-DEREVERB (8ch)

Eval Example: 000018_1.2955_0

Input Mixture: s0: SI-SDR = -10.2 dB

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 0: SI-SDR = 20.4 dB

Oracle Source 0:

L3DAS22 (8ch)

Eval Example: 1089-134686-0022_A

Input Mixture: s0: STOI = 0.552

TF-GridNet (DNN1+MCMFWF+DNN2) Separated Source 0: STOI = 0.995

Oracle Source 0:
